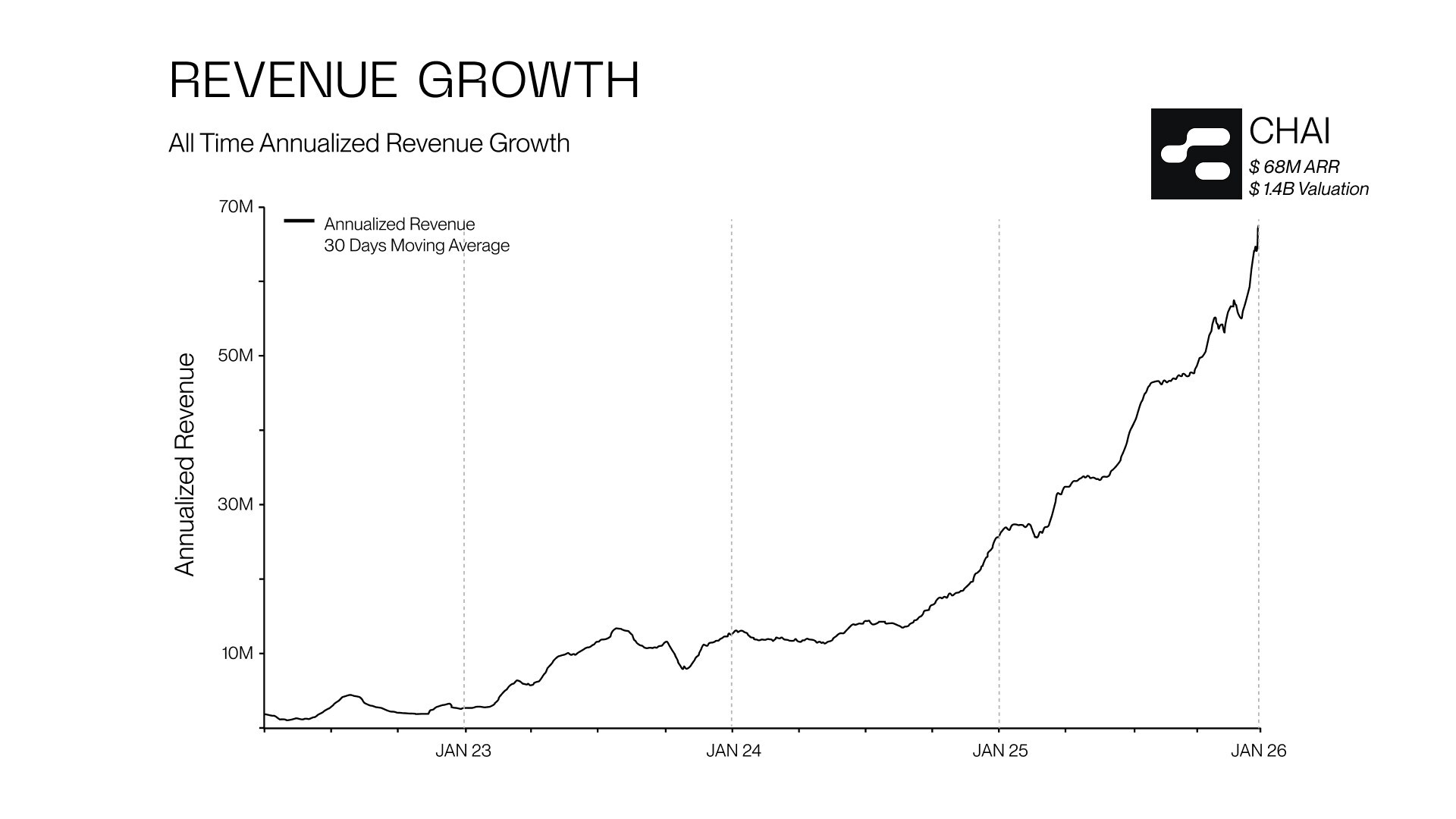

CHAI, a company in the artificial intelligence sector, has announced that it has reached $68 million in annual recurring revenue (ARR), a threefold increase over the past three years, alongside a valuation of $1.4 billion. As the company expands, it emphasizes its responsibility to ensure the safety of its AI offerings.

On February 21, 2026, in a statement from its headquarters in Palo Alto, California, CHAI outlined its commitment to maintaining rigorous safety standards. The company recognizes that rapid growth brings challenges, particularly in developing and managing AI systems that operate ethically and benefit society.

Investment in Safety and Compliance

To address these challenges, CHAI has invested considerable resources in strengthening its platform’s security as it scales. The company states that its safety protocols align with the EU AI Act and the NIST AI Risk Management Framework, both of which guide the responsible deployment of AI technologies, particularly with respect to user safety.

CHAI also collaborates with the International Association for Suicide Prevention (IASP) to integrate protective measures for vulnerable individuals. The company aims for its platform to generate content aligned with human values and uses a moderation system to filter harmful content. With this approach, CHAI intends its AI to serve as a compassionate resource for users in distress.

Advanced Measures for User Protection

CHAI has developed an advanced, real-time classifier designed to detect potential suicidal ideation or self-harm scenarios during active conversations. This tool enables early identification of unsafe behavior, allowing the company to initiate intervention strategies promptly. While the AI offers support, it is not a substitute for professional advice or medical care. Users expressing suicidal thoughts are encouraged to seek assistance from mental health professionals or helplines.
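As a rough illustration of how a real-time check of this kind could sit in a conversation loop, the Python sketch below scores each message before a reply is generated. It is not CHAI’s implementation: the `score_risk` and `generate_reply` callables, the `RISK_THRESHOLD` value, and the `SUPPORT_MESSAGE` text are hypothetical placeholders, and a production classifier would be a trained model supplied by the caller.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical values for illustration only; not taken from CHAI's system.
RISK_THRESHOLD = 0.8
SUPPORT_MESSAGE = (
    "If you are having thoughts of suicide or self-harm, please reach out "
    "to a mental health professional or a local helpline."
)


@dataclass
class Decision:
    flagged: bool  # True when the risk score met or exceeded the threshold
    reply: str     # Text to send back to the user


def handle_message(
    text: str,
    score_risk: Callable[[str], float],
    generate_reply: Callable[[str], str],
    threshold: float = RISK_THRESHOLD,
) -> Decision:
    """Score a message before replying; on a high score, return support text."""
    score = score_risk(text)
    if score >= threshold:
        # Skip normal generation and point the user toward human help.
        return Decision(flagged=True, reply=SUPPORT_MESSAGE)
    return Decision(flagged=False, reply=generate_reply(text))
```

Passing the classifier in as a callable keeps the routing logic independent of any particular model, so the threshold and the support text can be reviewed and adjusted separately.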

The platform supports operational transparency by logging user interactions on secure servers. This data is anonymized and then reviewed to identify potential risks without compromising user privacy. Applying standards similar to HIPAA, CHAI continuously refines its safety measures while respecting user confidentiality.
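One common way to separate logged conversations from user identities before review is keyed pseudonymization. The Python sketch below shows that general technique using the standard library; it is an assumption for illustration, not a description of CHAI’s pipeline, and the function names and record layout are hypothetical. Replacing an identifier does not by itself anonymize a record, since the message text can still contain identifying details.

```python
import hashlib
import hmac


def pseudonymize_user_id(user_id: str, secret_key: bytes) -> str:
    """Return a stable HMAC-SHA256 token that stands in for the user ID."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def build_review_record(user_id: str, message: str, secret_key: bytes) -> dict:
    """Build a review record (hypothetical layout) that omits the raw user ID."""
    return {
        "user": pseudonymize_user_id(user_id, secret_key),
        "message": message,
    }
```

Because the token is keyed, reviewers can group messages from the same user without being able to recover the original identifier unless they also hold the secret key.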

As the landscape of AI evolves, CHAI remains dedicated to scaling its infrastructure safely. The implementation of a robust AI safety framework reflects the company’s commitment to ethical practices and user protection. In a world where AI technology is increasingly integrated into daily life, CHAI aims to set a precedent in prioritizing user safety and responsible technology use.

For those interested in a deeper understanding of CHAI’s methodologies, further details can be found in the official paper titled “The Chai Platform’s AI Safety Framework,” available at: https://arxiv.org/abs/2306.02979. The company continues to collaborate with safety experts and stakeholders to ensure that user safety is at the forefront of its operations.