Researchers from the School of Cyber Science and Engineering at Southeast University and Purple Mountain Laboratories have proposed a privacy-preserving scheme for secure neural network inference. As smart devices and cloud computing spread, protecting the data sent to remote models has become increasingly important.

As users increasingly rely on cloud servers to process sensitive data, the risk of unauthorized access grows. The scheme, detailed in the paper “Efficient Privacy-Preserving Scheme for Secure Neural Network Inference,” is designed to protect both the user's data and the server's model from exposure.

The research team, including authors Liquan CHEN, Zixuan YANG, Peng ZHANG, and Yang MA, combined homomorphic encryption with secure multi-party computation. Their approach processes encrypted data quickly and accurately while keeping it private at every stage of inference.
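To give a feel for how secure multi-party computation lets two parties compute on data that neither sees in full, here is a minimal sketch of additive secret sharing, one common building block of such protocols. It is an illustration only and is not the protocol from the paper: the modulus, the function names, and the sample values are all hypothetical, and in this toy version the weights are visible to both parties, whereas a real scheme would also protect the server's model.

```python
import secrets

MODULUS = 2**32  # hypothetical ring size, chosen only for illustration


def share(value):
    """Split value into two additive shares that sum to value mod MODULUS."""
    r = secrets.randbelow(MODULUS)
    return r, (value - r) % MODULUS


def reconstruct(s0, s1):
    """Recombine two additive shares into the original value."""
    return (s0 + s1) % MODULUS


def linear_on_shares(weights, x_shares, bias=0):
    """Apply a linear function to one party's shares, with no communication."""
    return (sum(w * s for w, s in zip(weights, x_shares)) + bias) % MODULUS


x = [3, 5, 7]        # the user's private input (hypothetical values)
weights = [2, 4, 6]  # weights known to both parties in this toy example

shares = [share(v) for v in x]
party0 = [s[0] for s in shares]  # looks uniformly random on its own
party1 = [s[1] for s in shares]  # looks uniformly random on its own

y0 = linear_on_shares(weights, party0, bias=10)  # only one party adds the bias
y1 = linear_on_shares(weights, party1)
result = reconstruct(y0, y1)  # equals 2*3 + 4*5 + 6*7 + 10
```

Because the function is linear, each party can work on its own shares locally, and the true result only appears when the two output shares are combined.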

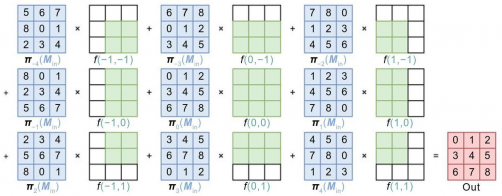

The scheme divides inference into three stages: merging, preprocessing, and online inference. A network parameter merging approach reduces the number of multiplication levels and the number of operations between ciphertext and plaintext. A fast convolution algorithm further improves computational efficiency.

The scheme uses the CKKS homomorphic encryption algorithm for high-precision computation and merges consecutive linear layers to cut communication rounds and computational cost. It achieves 99.24% accuracy on the MNIST dataset and 90.26% on the Fashion-MNIST dataset.
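The idea behind merging consecutive linear layers can be shown on plain, unencrypted numbers. The sketch below is a hedged illustration, not the authors' algorithm: it folds two affine layers, y = W2(W1 x + b1) + b2, into a single layer with W = W2 W1 and b = W2 b1 + b2. All function names and values are hypothetical. On encrypted inputs, a merged layer would presumably need one ciphertext-plaintext multiplication step where the unmerged pair needs two, which is the kind of saving the article describes.

```python
def matmul(a, b):
    """Multiply matrix a (m x k) by matrix b (k x n), both lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def matvec(a, v):
    """Multiply matrix a by vector v."""
    return [sum(row[t] * v[t] for t in range(len(v))) for row in a]


def affine(w, b, x):
    """Evaluate one linear layer: w @ x + b."""
    return [s + bi for s, bi in zip(matvec(w, x), b)]


def merge_affine(w1, b1, w2, b2):
    """Fold layer 1 followed by layer 2 into a single equivalent layer."""
    w = matmul(w2, w1)
    b = [s + bi for s, bi in zip(matvec(w2, b1), b2)]
    return w, b


# Hypothetical parameters: a 2 -> 3 layer followed by a 3 -> 2 layer.
W1 = [[1.0, 2.0], [0.0, -1.0], [3.0, 1.0]]
B1 = [0.5, -0.5, 1.0]
W2 = [[2.0, 0.0, 1.0], [1.0, -1.0, 0.0]]
B2 = [0.0, 1.0]
X = [1.0, 2.0]

two_step = affine(W2, B2, affine(W1, B1, X))  # evaluate the layers one by one
W, B = merge_affine(W1, B1, W2, B2)           # done once, before inference
one_step = affine(W, B, X)                    # single merged layer
```

Both paths give the same output for any input, so the merge can be done ahead of time without changing what the network computes.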

The authors compare the scheme with established methods such as DELPHI, GAZELLE, and CryptoNets. It reduces online-stage linear operation time by at least 11%, online-stage computational time by approximately 48%, and communication overhead by 66% when compared with non-merging approaches.

With data privacy a growing concern as cloud services spread, the scheme offers users a more secure way to interact with smart technologies. As more devices connect to the internet, safeguards of this kind could play an important role in protecting personal information.

The full text of the study is available at https://doi.org/10.1631/FITEE.2400371, providing further insight into the methods and results that could reshape privacy standards in cloud computing.

WHAT’S NEXT: The research team anticipates further development and refinement of their methods, aiming to enhance privacy measures in an increasingly digital world. As this technology progresses, stakeholders in tech and cybersecurity will want to keep a close eye on its potential applications and real-world impact.
